Company Profile

Dec 24

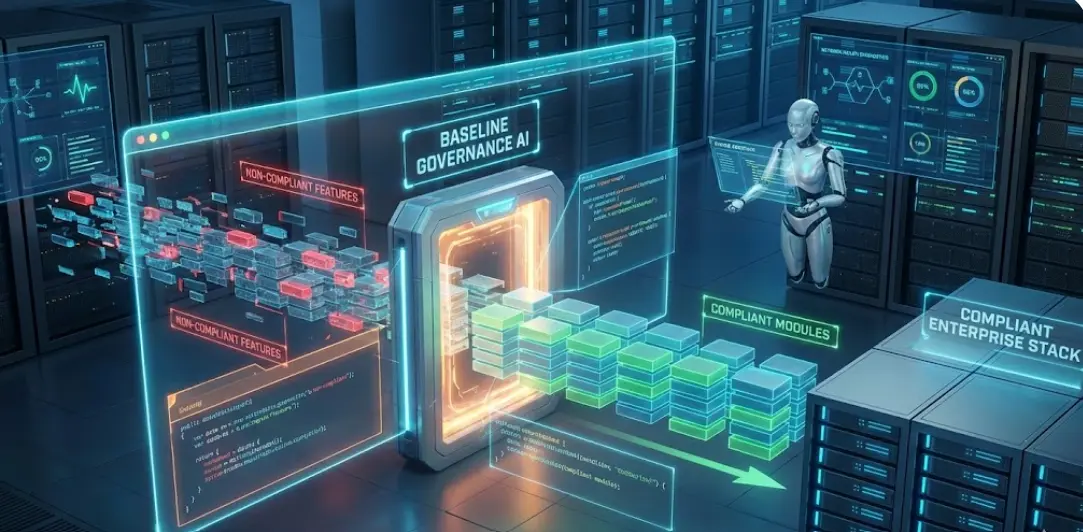

Automating Web Governance: Integrating AI Agents and Static Analysis for Enterprise Compliance

Recent winners of the Baseline Hackathon, including 'eslint-plugin-baseline-js' and 'baseline-mcp', point to a new approach to web governance. We explore how CTOs can use these tools to automate browser-compatibility checks and build safety guardrails into AI-assisted coding workflows.
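As a rough illustration of what an automated compatibility gate does, here is a minimal TypeScript sketch. It does not use the APIs of 'eslint-plugin-baseline-js' or 'baseline-mcp'. The feature IDs, the status table, and the `findNonBaseline` helper are all hypothetical. The three status values are loosely modeled on Baseline's tiers: widely available (`"high"`), newly available (`"low"`), and limited availability (`false`).

```typescript
// Baseline tiers: "high" = widely available, "low" = newly available,
// false = limited availability.
type BaselineStatus = "high" | "low" | false;

// Minimum tier a project accepts; choosing it is a policy decision (assumed here).
type Threshold = "high" | "low";

// Hypothetical status snapshot with placeholder feature IDs. A real pipeline
// would load current data instead of hard-coding it.
const BASELINE_STATUS: ReadonlyMap<string, BaselineStatus> = new Map<string, BaselineStatus>([
  ["feature-a", "high"],
  ["feature-b", "low"],
  ["feature-c", false],
]);

// True if a feature's status satisfies the project's minimum tier.
function meetsThreshold(status: BaselineStatus, threshold: Threshold): boolean {
  if (status === false) return false; // limited availability never passes
  return threshold === "low" || status === "high";
}

// Hypothetical helper: returns the features that violate the policy.
// Features missing from the status table are treated as failures.
function findNonBaseline(
  usedFeatures: string[],
  statuses: ReadonlyMap<string, BaselineStatus> = BASELINE_STATUS,
  threshold: Threshold = "high",
): string[] {
  return usedFeatures.filter((id) => {
    const status = statuses.get(id);
    return status === undefined || !meetsThreshold(status, threshold);
  });
}

// Usage: with the default "high" threshold, only feature-a passes.
const failures = findNonBaseline(["feature-a", "feature-b", "feature-c", "feature-unknown"]);
// failures is ["feature-b", "feature-c", "feature-unknown"]
```

Treating unknown features as failures is a conservative choice. In a CI job, a non-empty result would fail the build. A team that accepts newly available features could pass `"low"` as the threshold instead.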